Why “Best Practices” Can Quietly Kill Your Marketing Edge

When following the herd becomes dangerous.

If you’re at the bottom of the funnel, choosing partners, budgets, and tactics, you’ll hear a chorus: “Use best practices.” It sounds safe. It reduces debate. It reassures stakeholders. But here’s the catch: best practices optimize for average. They rarely create an advantage. And in competitive markets, average becomes invisible fast.

Best practices are table stakes, not differentiators

“Best practice” usually means a tactic has become widely adopted and de-risked: standard ICP (Ideal Customer Profile) templates, predictable nurture cadences, gated whitepapers, intent-based ads, lookalike audiences, and a familiar ABM playbook. You need these to avoid obvious mistakes, but they won’t help you win tight deals or deliver outsized efficiency. They compress everyone toward the same channels, the same timing, the same offers… and the same rising CAC (Customer Acquisition Cost).

Think of best practices as hygiene. Brush your teeth? Yes. But brushing harder won’t win you a marathon.

History’s reminder: winners outgrow the “known good”

A few familiar examples show how “the safe way” stops being safe:

Kodak’s film playbook was flawless. Yet the company delayed going digital even after inventing the digital camera, and that loyalty to the status quo dulled its advantage.

Nokia led by iterating hardware best practices; Apple reframed the game around software ecosystems and developer leverage.

The pattern is the same in marketing: when the game changes, optimizing yesterday’s playbook makes you faster at losing.

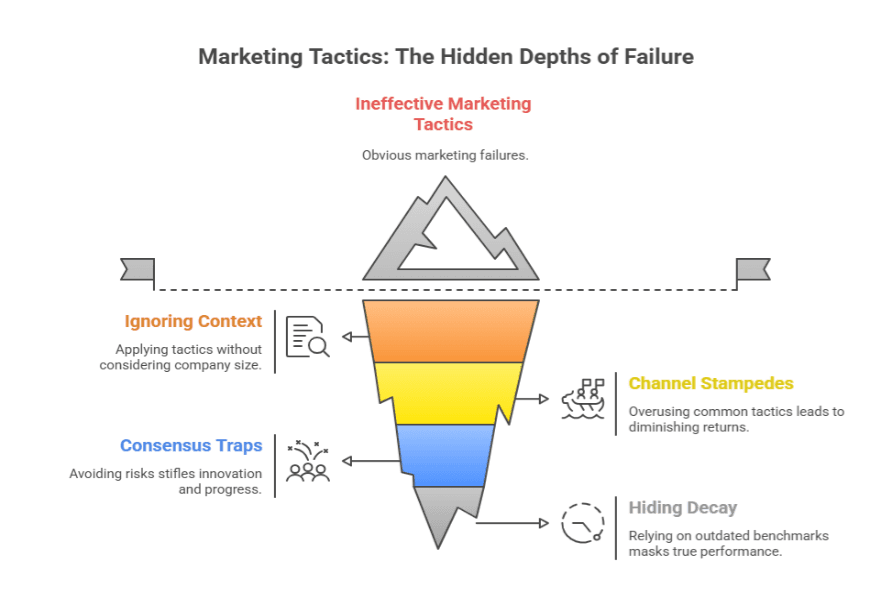

Four ways best practices quietly erode your edge

They ignore context. A tactic that worked at a $500M ARR vendor with long brand memory may fail for a $10M challenger with a 6-month runway.

They create channel stampedes. Once a tactic is common knowledge, costs rise and response falls. You pay more to look like everyone else.

They trap you in consensus. “No one gets fired for buying IBM” thinking kills the experiments that produce step-changes in pipeline efficiency.

They hide decay. Benchmarks lag. By the time something is published as “best,” early movers have already moved on.

Use best practices, then deliberately break them

Here’s a practical way to keep the hygiene and regain the advantage.

Separate table stakes from edge bets

Table stakes (the must-do basics)

These are the hygiene items that stop you from losing customers before you even start. Think of them like keeping your shop clean and the door easy to open. They include a fast website (no waiting), clean data (names, emails, and numbers that are correct), clear message–market fit (you’re solving a real problem for the right people), a tidy CRM (no duplicate leads), a clear ICP (you know exactly who you sell to), baseline reporting (you can see what’s working), and an SLA with Sales (simple rules for how and when marketing hands leads to sales).

Why they matter, but won’t make you famous: Table stakes cut friction and errors, saving money and building trust. But they’re copyable maintenance, not a moat. Get them right so nothing breaks, then find your edge elsewhere.

Edge bets (the smart, small rule-breaks)

These are deliberate experiments that make you stand out. They’re moves that go against the usual playbook, like ungating your best guide, focusing deeply on 20 dream accounts instead of 500, offering a 90-day pilot that proves ROI, or showing the product first and pitching later. The goal is to create an unfair advantage: more qualified pipeline, faster deals, higher win rates.

How to run them safely: Keep edge bets small and measurable (one audience, one offer, one channel). Define success upfront (e.g., 2× qualified pipeline), protect a tiny budget, then scale winners fast and kill losers faster.

Design edge bets with guardrails

Contrarian hypothesis:

If competitors hide their best content behind forms, we’ll ungate it and capture intent inside the product. Let buyers try the value first, then qualify by behavior (features used, depth of engagement) and fit, routing high-intent users to Sales as Product-Qualified Leads (PQLs).

Smallest viable test:

Run a 30-day sprint with one tight segment, one clear offer, and one channel so the only variable is “ungated + in-product qualification.” Instrument the funnel end-to-end to compare against your current gated motion.

Kill/scale criteria:

Set the yardstick before launch, e.g., qualified pipeline per impression (not CTR), plus guardrails for CAC and opportunity quality. If the test beats the threshold, scale; if not, kill it fast, document why, and move to the next variant.
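To make the kill/scale rule concrete, here is a minimal Python sketch. It assumes you can export impressions, qualified pipeline, CAC, and the share of opportunities Sales accepts for both the ungated test and the gated control. The `ArmMetrics` structure, the `kill_or_scale` function, and every threshold (1.5× pipeline lift, CAC no more than 20% above control, at least 60% of opportunities accepted) are hypothetical placeholders. Substitute the numbers your team agrees on before launch.

```python
from dataclasses import dataclass


@dataclass
class ArmMetrics:
    """Results for one arm of the test: the ungated variant or the gated control."""
    impressions: int
    qualified_pipeline: float   # value of pipeline that passed qualification
    cac: float                  # customer acquisition cost for this arm
    opp_acceptance_rate: float  # share of opportunities Sales accepted, 0 to 1


def pipeline_per_1k_impressions(arm: ArmMetrics) -> float:
    """Primary yardstick: qualified pipeline per 1,000 impressions (not CTR)."""
    return arm.qualified_pipeline / arm.impressions * 1000


def kill_or_scale(variant: ArmMetrics, control: ArmMetrics,
                  lift_threshold: float = 1.5,   # hypothetical: 1.5x control pipeline
                  max_cac_ratio: float = 1.2,    # hypothetical: CAC at most 20% above control
                  min_acceptance: float = 0.6,   # hypothetical: opportunity-quality floor
                  ) -> tuple[str, list[str]]:
    """Apply pre-agreed thresholds; return the decision and any failed checks."""
    failed = []
    lift = pipeline_per_1k_impressions(variant) / pipeline_per_1k_impressions(control)
    if lift < lift_threshold:
        failed.append(f"pipeline lift {lift:.2f}x is below {lift_threshold}x")
    if variant.cac > control.cac * max_cac_ratio:
        failed.append("CAC guardrail breached")
    if variant.opp_acceptance_rate < min_acceptance:
        failed.append("opportunity quality below floor")
    return ("scale" if not failed else "kill"), failed


# Illustrative numbers only, not benchmarks.
gated = ArmMetrics(impressions=200_000, qualified_pipeline=300_000,
                   cac=18_000, opp_acceptance_rate=0.55)
ungated = ArmMetrics(impressions=150_000, qualified_pipeline=360_000,
                     cac=19_500, opp_acceptance_rate=0.70)

decision, reasons = kill_or_scale(ungated, gated)
print(decision, reasons)  # scale []
```

Returning the failed checks alongside the decision covers the “document why” step. A killed test leaves a record of which yardstick it missed, which feeds the next variant.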

Measure differentiation, not vanity

Track share of voice within your ICP, pipeline velocity, sales cycle compression, win rate by segment, and the percentage of deals influenced by new motions, not just MQLs or blended CPC.
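Several of these metrics are simple arithmetic once deals are tagged. The Python sketch below uses the commonly cited pipeline velocity formula (opportunities × average deal size × win rate ÷ average sales cycle length in days). It also computes win rate by segment, the share of deals influenced by new motions, and cycle compression. The `Deal` fields, including the `new_motion` attribution flag, are hypothetical. Deciding whether a deal was “influenced” is an attribution choice your team has to define.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Deal:
    segment: str       # e.g. "mid-market" or "enterprise"
    won: bool          # True if closed-won, False if closed-lost
    cycle_days: int    # days from opportunity creation to close
    new_motion: bool   # hypothetical flag: touched by an edge-bet motion


def pipeline_velocity(opportunities: int, avg_deal_size: float,
                      win_rate: float, avg_cycle_days: float) -> float:
    """Expected revenue moving through the pipeline per day."""
    return opportunities * avg_deal_size * win_rate / avg_cycle_days


def win_rate_by_segment(deals: list[Deal]) -> dict[str, float]:
    """Closed-won deals divided by all closed deals, per segment."""
    won = defaultdict(int)
    total = defaultdict(int)
    for d in deals:
        total[d.segment] += 1
        won[d.segment] += int(d.won)
    return {seg: won[seg] / total[seg] for seg in total}


def share_influenced(deals: list[Deal]) -> float:
    """Fraction of closed deals touched by at least one new motion."""
    return sum(d.new_motion for d in deals) / len(deals)


def avg_cycle_days(deals: list[Deal], new_motion: bool) -> float:
    """Average sales cycle for deals with (or without) a new-motion touch."""
    cycles = [d.cycle_days for d in deals if d.new_motion == new_motion]
    return sum(cycles) / len(cycles)


# Illustrative closed deals, not real data.
deals = [
    Deal("mid-market", True, 60, True),
    Deal("mid-market", False, 90, False),
    Deal("enterprise", True, 150, True),
    Deal("enterprise", False, 180, False),
    Deal("enterprise", True, 120, False),
]

print(win_rate_by_segment(deals))
print(share_influenced(deals))  # 0.4
# Cycle compression: days saved on deals touched by new motions.
print(avg_cycle_days(deals, False) - avg_cycle_days(deals, True))  # 25.0
print(round(pipeline_velocity(50, 60_000, 0.25, 90), 2))  # 8333.33
```

With a handful of deals, numbers like these swing widely. Treat them as a direction to watch over several quarters rather than proof that a motion works.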

Build a ‘forbidden experiments’ backlog

Once a quarter, run 3–5 tests that break an accepted rule. Here are a few examples.

Five “break-the-herd” experiments for B2B marketers

No-Form Thought Leadership

Ungate your best asset and put the form after the value: invite readers to a live teardown, or offer a tool or calculator. Pair it with progressive profiling in product or chat.

Why it works: Trust first, data second. You’ll filter in the right buyers by depth of interaction, not a PDF download.

Sparse, High-Intent ABM

Instead of chasing 500 “target accounts,” pick 20 super-fit accounts and craft bespoke content with them (peer roundtables, joint field notes).

Why it works: Depth beats breadth. Sales cycles shorten when buyers co-create the solution narrative.

Offer Redesign Around Friction

Audit the last 10 losses. Turn the top objection into your offer (e.g., “90-day pilot with in-product ROI tracking” instead of another demo CTA).

Why it works: You compete on risk removal, not louder claims.

Channel Inversion

If everyone fights on LinkedIn, divert 20% of spend to niche communities, industry Slack groups, partner newsletters, or technical docs SEO.

Why it works: You trade CPM scale for contextual trust and, often, higher intent.

Sales-Assisted Product-Led Growth for Enterprise

Launch a constrained, real-use free tier for one high-value workflow, backed by a human success concierge for target accounts.

Why it works: Experience beats explanation. Buyers feel value before procurement enters.

Quick diagnostic: Is the herd dulling your edge?

Are most of your ideas a response to a benchmark rather than a buyer insight?

Do your dashboards celebrate volume over velocity and conversion quality?

Could a competitor swap logos on your website and campaigns and no one would notice?

When was the last time you killed a “best practice” because a test disproved it?

If these sting, you’re probably over-indexed on the familiar.

A short, real-world posture shift

Two similar SaaS firms used the same standard marketing: finding interested buyers, promoting content on other sites, and having sales reps reach out. One company added a quarterly “Edge Sprint”: a 4-week period to try one bold experiment at a time.

Examples: the founder doing live product teardowns, adding practical calculators inside the product, or writing point-of-view pieces with customers.

They didn’t stop doing the basics (fast site, clean data, good handoff to sales). They just layered smart, small rule-breaks on top.

Result: Better-quality leads, faster deal cycles, and more wins, because they were different, not because they did more of the same.

Takeaway: Keep your basics clean, but set aside time each quarter to test a few brave ideas. The edge comes from the well-designed exceptions.

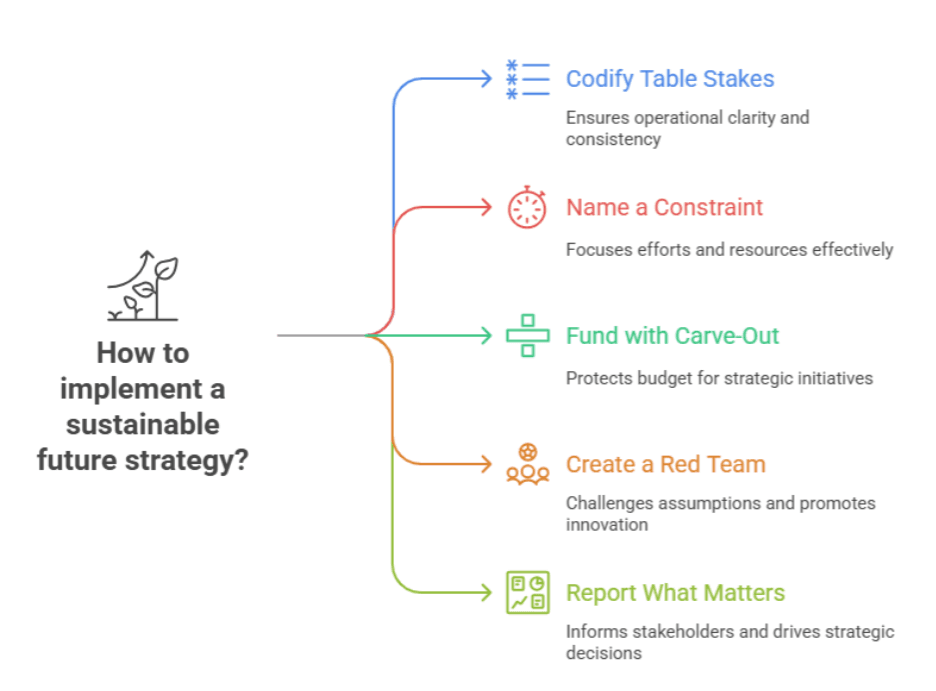

Implementation checklist

Codify table stakes: document non-negotiables that keep ops clean.

Name a constraint: “We’ll run 3 edge bets per quarter, each with pre-agreed kill/scale rules.”

Fund with a carve-out: protect 10–15% of program spend from being reabsorbed by business-as-usual (BAU) programs.

Create a red team: one marketer and one seller tasked to challenge any “because it’s best practice” reasoning.

Report what matters: show executive stakeholders the opportunity cost of sameness (rising CAC, flat win rates) and the impact of edge bets on pipeline quality.

The thought to leave you with

Best practices keep the lights on. Next practices win markets. When everyone optimizes the same checklist, your edge lives in the well-designed exception: the rule you can justify breaking because you understand your buyer better than your benchmark.

If you’re about to hire a partner or approve a plan, ask one question: “Where, specifically, will we break the playbook, and how will we know if it worked?” That’s where advantage begins.